How Did Artificial Intelligence Develop

Figure 1. The stepped reckoner was an early machine that could perform basic calculations. Others followed in the 18th and early 19th centuries, but most could perform only simple computing tasks.

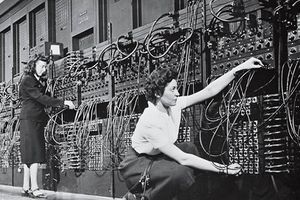

| + | [[File:02102016 ENIAC programmers LA.2e16d0ba.fill-735x490.jpg|thumb|left|Figure 2. The ENIAC machine, built in the United States, in the 1940s was used to conduct numeric calculations that could help crack codes.]] | ||


Latest revision as of 23:03, 21 September 2021

Artificial intelligence (AI) is becoming increasingly pervasive in our world. Whether through social media applications, facial recognition, or credit card and online security systems, we increasingly live in a world where AI is critical to modern life. We have also heard warnings that AI could take away jobs or even threaten humanity. The history of AI, however, is linked not only to the history of computing but also to mathematical innovations made over more than two millennia.

Early Developments

The early history of AI can be traced to intellectual foundations laid in the middle and late 1st millennium BCE. During this period, philosophers and early mathematicians in Greece, India, China, Babylonia, and perhaps elsewhere began to imagine artificial devices that could perform tasks and calculations, and to develop formal methods of reasoning. Aristotle's syllogism, a deductive, logic-based form of argument, and Euclid's axiomatic geometry showed that conclusions could be derived from accepted premises by following fixed rules. If the premises are known to be true, anything that applies those rules correctly, including a device built for the purpose, can derive the conclusion. Effectively, logic could be taught to artificial devices.
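
The rule-following character of the syllogism can be illustrated with a minimal Python sketch. It is not a historical reconstruction: it encodes each premise "All X are Y" as a pair `(X, Y)` (an assumption made for illustration) and repeatedly applies the classic form "All M are P; all S are M; therefore all S are P". The function name `entails` is hypothetical.

```python
def entails(premises: set[tuple[str, str]], subject: str, predicate: str) -> bool:
    """Return True if "All <subject> are <predicate>" follows from premises of the
    form "All X are Y" (stored as pairs), by chaining the syllogism step by step."""
    frontier = [subject]   # categories already known to contain every <subject>
    seen = {subject}
    while frontier:
        current = frontier.pop()
        if current == predicate:
            return True
        for x, y in premises:
            # "All current are y" follows from "All subject are current" + "All current are y".
            if x == current and y not in seen:
                seen.add(y)
                frontier.append(y)
    return False

premises = {("Greeks", "humans"), ("humans", "mortals")}
print(entails(premises, "Greeks", "mortals"))  # True
print(entails(premises, "mortals", "Greeks"))  # False
```

No understanding of what "Greeks" or "mortals" mean is involved; the conclusion follows purely from the form of the premises, which is the property that made logic a candidate for mechanization.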

Although these philosophers reasoned that such devices were possible, they understood that building them was far from easy. Al-Khwarizmi, a scholar active in the late 8th and 9th centuries CE whose name became the basis for the term <i>algorithm</i>, developed rules and foundations for what became algebra. Working as a mathematician and astronomer at the House of Wisdom in Baghdad, he set out systematic, step-by-step procedures for solving linear and quadratic equations, showing that many calculations could be carried out by following fixed rules, the kind of procedure a mechanical device could later automate. In the 13th century, the Majorcan philosopher Ramon Llull also developed the idea that machines could perform simple logical tasks, repeating them so that reasoning could be accomplished in an automated way.[1]
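
Al-Khwarizmi's method for equations of the form x² + bx = c, in which the square is "completed", is an early algorithm in the modern sense: a fixed sequence of steps that always yields the answer. The Python sketch below restates that recipe using his well-known example x² + 10x = 39. It returns only the positive root (negative solutions were not considered at the time), assumes b and c are positive, and the function name is hypothetical.

```python
import math

def solve_square_and_roots(b: float, c: float) -> float:
    """Positive root of x**2 + b*x = c (b, c > 0), following the verbal
    completing-the-square recipe one step at a time."""
    half = b / 2             # 1. halve the number of roots
    square = half * half     # 2. multiply the half by itself
    total = square + c       # 3. add the constant
    root = math.sqrt(total)  # 4. take the square root
    return root - half       # 5. subtract the half: this is the unknown

print(solve_square_and_roots(10, 39))  # 3.0
```

Each step is mechanical: nothing in the procedure depends on insight into the particular equation, which is exactly what later allowed such procedures to be handed to machines.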

Gottfried Wilhelm Leibniz developed these ideas further in the 17th century, when he and Isaac Newton were independently laying the foundations of modern calculus. Drawing on Llull's ideas and working with engineers, Leibniz helped develop a machine that could perform simple calculations, an early kind of calculator (Figure 1). Known as the stepped reckoner, this mechanical device used the movement of gears to add, subtract, multiply, and divide. Thomas Hobbes and René Descartes also saw that logic and mathematical reasoning could be used to solve problems automatically by determining whether a given proposition was true. They further theorized that an algorithmic, automated approach could evaluate arguments and determine their validity using reason or mathematical logic.
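
The stepped reckoner multiplied mainly through repeated addition, shifting its movable carriage one decimal place for each digit of the multiplier. The Python sketch below imitates that principle in software. It is a simplified illustration, not a model of the machine's gears, and the function name is hypothetical; the decimal shift is written here as multiplication by a power of ten.

```python
def shift_and_add_multiply(multiplicand: int, multiplier: int) -> int:
    """Multiply non-negative integers using repeated addition plus decimal shifts,
    the way a hand-cranked calculator works through the multiplier digit by digit."""
    if multiplicand < 0 or multiplier < 0:
        raise ValueError("this sketch handles non-negative integers only")
    accumulator = 0
    shift = 0                            # current decimal position of the "carriage"
    while multiplier > 0:
        digit = multiplier % 10          # lowest remaining digit of the multiplier
        for _ in range(digit):           # one "crank turn" adds the shifted multiplicand once
            accumulator += multiplicand * 10 ** shift
        multiplier //= 10
        shift += 1                       # move the carriage one decimal place
    return accumulator

print(shift_and_add_multiply(127, 34))  # 4318
```

Multiplying 127 by 34 takes only 4 + 3 = 7 additions rather than 34, because the carriage shift handles the place value of each digit.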

A key development of this period was a formal system of symbols, which also became the basis for the mathematical notation used to present algorithms today. It provided the mathematical and logical foundations for expressing algorithms in a standardized way, and AI research later relied on this notation to present its theoretical ideas.[2]

Later Innovation

In the 1840s, Ada Lovelace realized that Charles Babbage's proposed Analytical Engine could be programmed to do more than the simple calculations demonstrated by machines such as Leibniz's stepped reckoner. In her 1843 notes on the engine, she described an algorithm for computing Bernoulli numbers, which is why she is often credited with writing the first computer program. She also speculated that such a machine might one day compose music.

In the 19th and early 20th centuries, further developments in mathematics strengthened the intellectual foundations for AI. George Boole and Gottlob Frege further developed mathematical logic. This work eventually led Alfred North Whitehead and Bertrand Russell to write <i>Principia Mathematica</i>, a three-volume work published between 1910 and 1913 that attempted to derive mathematics from formal logic. In 1931, Kurt Gödel showed that formal logic has limits: any consistent formal system powerful enough to express basic arithmetic contains true statements that cannot be proved within that system. Within those limits, however, this body of work suggested that mathematical reasoning could be mechanized.
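
Boole's central idea was that logical statements can be handled algebraically, so that checking them becomes a matter of calculation rather than intuition. As an illustration, the Python sketch below uses a modern brute-force truth-table check (not Boole's own notation) to test whether a formula holds under every combination of truth values. The helper names are hypothetical.

```python
from itertools import product
from typing import Callable

def is_tautology(formula: Callable[..., bool], num_vars: int) -> bool:
    """Return True if `formula` is true under every assignment of True/False to its variables."""
    return all(formula(*values) for values in product((False, True), repeat=num_vars))

def de_morgan(p: bool, q: bool) -> bool:
    # De Morgan's law: "not (p and q)" has the same truth value as "(not p) or (not q)".
    return (not (p and q)) == ((not p) or (not q))

def confused_rule(p: bool, q: bool) -> bool:
    # Not a law of logic: "p or q" does not always match "p and q".
    return (p or q) == (p and q)

print(is_tautology(de_morgan, 2))      # True
print(is_tautology(confused_rule, 2))  # False
```

Because the number of assignments doubles with each variable, this exhaustive approach only works for small formulas, but it shows how a logical law can be confirmed by pure calculation.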

Effectively, this work challenged mathematicians and engineers to devise more complex machines that could use logical reasoning to answer questions. Perhaps the most important step toward what became the foundation of computing and AI was Alan Turing's description of the Turing machine in 1936. This abstract machine manipulates symbols, such as 0 and 1, on a tape according to a small set of simple rules, deriving a solution by applying those rules one step at a time. In theory, the tape is unbounded, and the machine reads and writes it one cell at a time as it works toward a solution. This description later became a conceptual foundation for computer memory and the central processing unit (CPU).

The machine needs just six simple operations: reading the current symbol, writing a symbol, moving one cell left, moving one cell right, changing its internal state, and halting. Yet with these simple operations, very complex processes can be carried out. Modern computers still rely on much of the basic logic Turing described. The idea of calculation as rule-following also fed into the idea of AI, as later theorists drew on the Turing machine to derive solutions to problems.[3]
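
To make the model concrete, here is a minimal Turing machine simulator in Python. The transition-table encoding, the state names, and the binary-increment example are illustrative choices rather than Turing's 1936 notation, and the `max_steps` guard is an assumption added because a real Turing machine may run forever.

```python
from collections import defaultdict

# A transition table maps (state, symbol read) -> (symbol to write, head move, next state).
# Head moves: -1 = one cell left, +1 = one cell right, 0 = stay put.
Rules = dict[tuple[str, str], tuple[str, int, str]]
BLANK = "_"

def run_turing_machine(rules: Rules, tape_input: str, start: str,
                       halt: str = "halt", max_steps: int = 10_000) -> str:
    """Run a one-tape machine and return the non-blank part of the final tape."""
    tape = defaultdict(lambda: BLANK, enumerate(tape_input))  # unbounded in both directions
    head, state, steps = 0, start, 0
    while state != halt:
        if steps >= max_steps:
            raise RuntimeError("machine did not halt within max_steps")
        write, move, state = rules[(state, tape[head])]  # read the cell, look up the rule
        tape[head] = write                               # write a symbol
        head += move                                     # move the head
        steps += 1
    if not tape:
        return ""
    cells = (tape[i] for i in range(min(tape), max(tape) + 1))
    return "".join(cells).strip(BLANK)

# Example machine: add 1 to a binary number.
INCREMENT: Rules = {
    ("scan", "0"): ("0", +1, "scan"),       # walk right to the end of the number
    ("scan", "1"): ("1", +1, "scan"),
    ("scan", BLANK): (BLANK, -1, "carry"),  # step back onto the last digit
    ("carry", "1"): ("0", -1, "carry"),     # 1 plus carry gives 0; keep carrying left
    ("carry", "0"): ("1", 0, "halt"),       # 0 plus carry gives 1; done
    ("carry", BLANK): ("1", 0, "halt"),     # ran off the left edge: new leading 1
}

print(run_turing_machine(INCREMENT, "1011", start="scan"))  # 1100
print(run_turing_machine(INCREMENT, "111", start="scan"))   # 1000
```

All of the "intelligence" lives in the six-row table; the simulator itself only reads, writes, moves, and changes state, mirroring the handful of operations described above.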

World War II was one of the key turning points for AI, as code-breaking machines were needed to crack German and Japanese military codes. Turing helped design the electromechanical Bombe used at Bletchley Park to break German Enigma messages, and John von Neumann's work on computer design laid foundations for the general-purpose machines that were later applied to many different logic problems (Figure 2). After the war, many speculated that a kind of artificial brain could be built from computers using formal, mathematical logic. In 1956, at a workshop held at Dartmouth College, AI was established as a distinct area of computing research.

Developments that have continued to the present built on the idea that the connections between neurons in the human brain could be replicated. Neuroscience and research on human cognition became very influential in AI, as early researchers used artificial neural networks, along with ideas about how humans learn from experience, to try to replicate learning in machines. This line of work drew heavily on research by Warren McCulloch and Walter Pitts, who in 1943 showed that networks of simplified artificial neurons could compute logical functions. Marvin Lee Minsky was among the first to build a neural network machine, using cognitive research to design a machine that would replicate aspects of human learning. Throughout the 1950s and 1960s, early AI programs were also taught to play games such as chess and checkers on machines such as the Ferranti Mark 1 and the IBM 702.[4]
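
The artificial neuron described by McCulloch and Pitts can be sketched as a threshold unit: it "fires" (outputs 1) when enough of its binary inputs are active. The Python sketch below uses the common modern simplification with numeric weights (the 1943 model treated inhibition somewhat differently), and the weights, thresholds, and function names are illustrative assumptions chosen by hand, not learned.

```python
def mcculloch_pitts_unit(inputs: list[int], weights: list[int], threshold: int) -> int:
    """Return 1 (fire) if the weighted sum of binary inputs reaches the threshold, else 0."""
    if len(inputs) != len(weights):
        raise ValueError("inputs and weights must have the same length")
    total = sum(x * w for x, w in zip(inputs, weights))
    return 1 if total >= threshold else 0

# Logical functions built from single units with hand-chosen weights and thresholds.
def logical_and(a: int, b: int) -> int:
    return mcculloch_pitts_unit([a, b], [1, 1], threshold=2)   # both inputs must be active

def logical_or(a: int, b: int) -> int:
    return mcculloch_pitts_unit([a, b], [1, 1], threshold=1)   # one active input is enough

def logical_not(a: int) -> int:
    return mcculloch_pitts_unit([a], [-1], threshold=0)        # an active input suppresses firing

for a in (0, 1):
    for b in (0, 1):
        print(a, b, logical_and(a, b), logical_or(a, b))
```

Since AND, OR, and NOT can be combined into any logical function, networks of such units can in principle compute anything a logic circuit can; learning the weights from experience came later.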

Current Trends

Today, artificial neural networks remain among the most widely used machine learning techniques. So-called deep learning has become a subfield in which machines learn a variety of tasks from large datasets, picking up basic patterns with very little guiding information. Meanwhile, in the 1960s and 1970s, the related field of robotics began to develop in earnest. Japanese research institutions often led the merger of AI with robotics; Waseda University's WABOT project, for example, produced an early humanoid robot.
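
The idea of a network picking up patterns from labeled examples, rather than from hand-written rules, can be shown at the smallest possible scale with a single unit trained by Frank Rosenblatt's perceptron learning rule. It is swapped in here only as the simplest illustration: modern deep learning trains much larger, multi-layer networks with gradient-based methods. The OR dataset, epoch count, and learning rate are illustrative assumptions.

```python
Example = tuple[tuple[float, ...], int]

def predict(weights: list[float], bias: float, features: tuple[float, ...]) -> int:
    """Output 1 if the weighted sum of the features plus the bias is positive, else 0."""
    activation = bias + sum(w * x for w, x in zip(weights, features))
    return 1 if activation > 0 else 0

def train_perceptron(examples: list[Example], epochs: int = 20,
                     learning_rate: float = 1.0) -> tuple[list[float], float]:
    """Perceptron rule: adjust the weights only when a prediction is wrong."""
    weights = [0.0] * len(examples[0][0])
    bias = 0.0
    for _ in range(epochs):
        mistakes = 0
        for features, target in examples:
            error = target - predict(weights, bias, features)  # -1, 0, or +1
            if error != 0:
                mistakes += 1
                weights = [w + learning_rate * error * x for w, x in zip(weights, features)]
                bias += learning_rate * error
        if mistakes == 0:  # every example classified correctly: stop early
            break
    return weights, bias

# Labeled examples of logical OR; no rule for OR is written anywhere in the code.
OR_DATA: list[Example] = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 1)]
weights, bias = train_perceptron(OR_DATA)
print([predict(weights, bias, features) for features, _ in OR_DATA])  # [0, 1, 1, 1]
```

A single perceptron is guaranteed to learn only patterns that a straight line can separate (OR qualifies; XOR does not), which is one reason later research moved to deeper, multi-layer networks.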

Although optimism about AI grew through the 1960s, by the 1970s there was a backlash and growing doubt about whether machines could replicate human thinking, or whether that was even desirable. One of the main problems was a lack of computational power. Machines could not yet be built powerful enough to solve seemingly simple tasks, such as recognizing a face or moving a robot across a room without hitting something. These tasks required far more computational power than was available until the 1980s.

By then, industry had begun to play a greater role than government funding in shaping AI. From the 1990s and early 2000s onward, AI has grown substantially. This growth was driven in part by major successes such as IBM's Deep Blue, which defeated reigning world chess champion Garry Kasparov in 1997. In 2005, Stanford's autonomous car Stanley traveled more than 130 miles across the desert by itself to win the DARPA Grand Challenge, helping to launch much of the research in autonomous vehicles that has followed. Military and consumer applications, along with growing demand for cybersecurity and for firms to solve tasks quickly, have inspired new research and funding for AI that has not substantially abated since the late 1990s. Increasing computational power and the use of large networks of machines have driven this most recent wave of developments.[5]

Summary

For millennia, philosophers and mathematicians have discussed how machines or devices could be used to solve problems by replicating some form of thought or logic. By the 1950s and 1960s, optimism about AI had grown to the point that people began to imagine a future in which robots or machines would fully replicate human thought. It soon became clear that this would not happen quickly, as computers were not nearly powerful enough to recreate even basic thinking tasks. However, as computing power has increased substantially, new forms of AI have made many complex tasks possible, and AI has become a fixture in many day-to-day interactions in our social and business lives.

References

- For more on the intellectual foundations of AI, see: O’Regan, Gerard. 2008. A Brief History of Computing. London: Springer.

- For more on the early history of algorithms and of the mathematical logic that shaped concepts of AI, see: Nilsson, Nils J. 2010. The Quest for Artificial Intelligence: A History of Ideas and Achievements. Cambridge; New York: Cambridge University Press.

- For key developments in the 19th and early 20th centuries, see: Pearce, Q. L. 2011. Artificial Intelligence. Technology 360. Detroit: Lucent Books.

- For more on World War II and post-war developments in AI, see: Coppin, Ben. 2004. Artificial Intelligence Illuminated. 1st ed. Boston: Jones and Bartlett Publishers.

- For more on AI trends since the 1960s, see: Bhaumik, Arkapravo. 2018. From AI to Robotics: Mobile, Social, and Sentient Robots. Boca Raton: CRC Press.